Apple is facing fresh calls to withdraw its controversial artificial intelligence (AI) feature that has generated inaccurate news alerts on its latest iPhones.

The product is meant to summarise breaking news notifications but has in some instances invented entirely false claims.

The BBC first complained to the tech giant about its journalism being misrepresented in December but Apple didn’t respond until Monday this week, when it said it was working to clarify that summaries were AI-generated.

Alan Rusbridger, the former editor of the Guardian, told the BBC that Apple needed to go further and pull a product he said was “clearly not ready.”

He added the technology was “out of control” and posed a considerable misinformation risk.

“Trust in news is low enough already without giant American corporations coming in and using it as a kind of test product,” he told the Today programme, on BBC Radio 4.

Sequence of errors

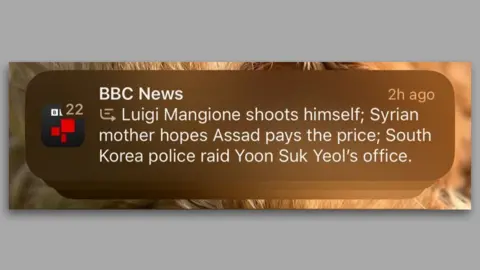

The BBC complained last month after an AI-generated summary of its headline falsely told some readers that Luigi Mangione, the man accused of killing UnitedHealthcare CEO Brian Thompson, had shot himself.

On Friday, Apple’s AI inaccurately summarised BBC app notifications to claim that Luke Littler had won the PDC World Darts Championship hours before it began – and that the Spanish tennis star Rafael Nadal had come out as gay.

This marks the first time Apple has formally responded to the concerns voiced by the BBC about the errors, which appear as if they are coming from within the organisation’s app.

“These AI summarisations by Apple don’t reflect – and in some cases completely contradict – the original BBC content,” the BBC said on Monday.

“It’s critical that Apple urgently addresses these issues as the accuracy of our news is essential in maintaining trust.”

The BBC is not the only news organisation affected.

In November, a ProPublica journalist highlighted erroneous Apple AI summaries of alerts from the New York Times app suggesting it had reported that Israel’s Prime Minister Benjamin Netanyahu had been arrested.

A further inaccurate summary of a New York Times story appears to have been published on January 6, relating to the fourth anniversary of the Capitol riots.

The New York Times has declined to comment.

Reporters Without Borders, an organisation representing the rights and interests of journalists, called on Apple to disable the feature in December.

It said the attribution of a false headline about Mr Mangione to the BBC showed “generative AI services are still too immature to produce reliable information for the public”.

Apple said its update would arrive “in the coming weeks”.

It has previously said its notification summaries – which group together and rewrite previews of multiple recent app notifications into a single alert on users’ lock screens – aim to allow users to “scan for key details”.

“Apple Intelligence features are in beta and we’re continuously making improvements with the help of user feedback,” the company said in a statement on Monday, adding that receiving the summaries is optional.

“A software update in the coming weeks will further clarify when the text being displayed is summarization provided by Apple Intelligence. We encourage users to report a concern if they view an unexpected notification summary.”

The feature, along with others released as part of its broader suite of AI tools, was rolled out in the UK in December. It is only available on its iPhone 16 models, iPhone 15 Pro and Pro Max handsets running iOS 18.1 and above, as well as on some iPads and Macs.

Apple is not alone in having rolled out generative AI tools that can create text, images and more content when prompted by users – but with varying results.

Google’s AI overviews feature, which provides a written summary of information from results at the top of its search engine in response to user queries, faced criticism last year for producing some erratic responses.

At the time a Google spokesperson said that these were “isolated examples” and that the feature was generally working well.